Salient Features for Moving Object Detection in Adverse Weather Conditions during Night Time

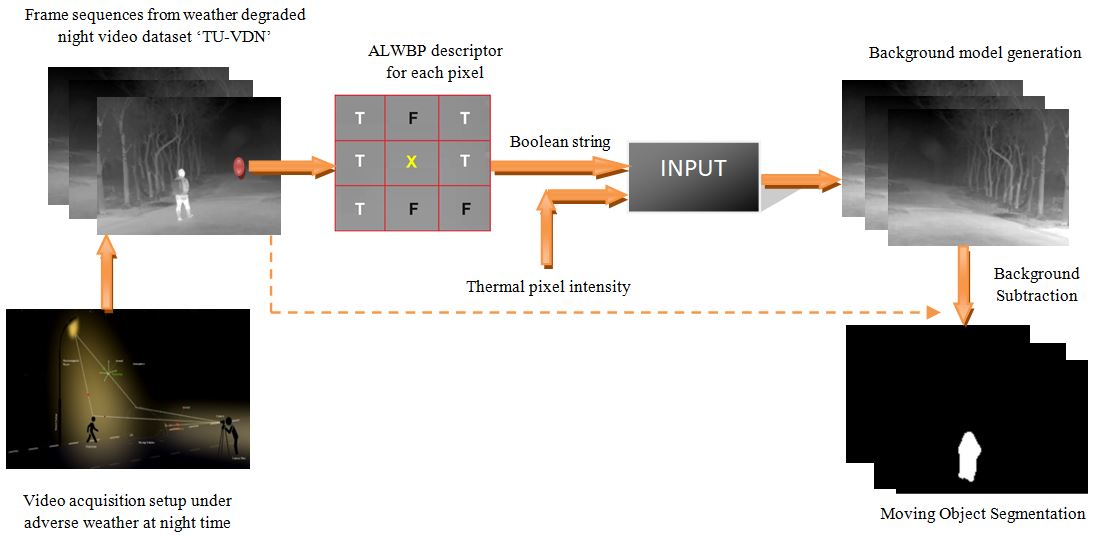

Foreground segmentation of moving objects in adverse atmospheric conditions such as fog, rain, low light and dust is a challenging task in computer vision. Thermal infrared imaging has demonstrated advantages at night time under adverse atmospheric conditions, owing to its long wavelength. However, existing state-of-the-art object detection techniques have not been useful in such scenarios. We propose an improved background model that utilizes both thermal pixel intensity features and spatial video salient features. The proposed spatial video salient features are represented as an Akin-based per-pixel Boolean string over a local region block, and depend on the effect of neighbouring pixels on a centre pixel. The result of this Boolean procedure is referred to as the ‘Akin-based Local Whitening Boolean Pattern (ALWBP)’, which differentiates foreground and background regions accurately, even against a cluttered background. The background model is controlled via (i) the automatic adaptation of parameters such as the decision threshold RT and the learning parameter L, and (ii) the updating of the background samples Bsample_int and Bsample_ALWBP to minimize (a) the effect of the background dynamics of outdoor scenes, and (b) the temperature polarity changes during the first appearance of a moving object in thermal frame sequences. The performance of this model is evaluated using nine existing standard segmentation performance metrics on our newly created ‘Tripura University Video Dataset at Night time (TU-VDN)’ and on the publicly available CDnet-2014 dataset. TU-VDN is a weather-degraded video dataset that consists of sixty video sequences representing four atmospheric conditions: low light, dust, rain, and fog. The results of a performance comparison with fourteen state-of-the-art detection techniques also demonstrate the high accuracy of the proposed technique.

Publications

"Novel Deeper AWRDNet: Adverse Weather-Affected Night Scene Restorator Cum Detector Net for Accurate Object Detection",

A. Singha, M.K. Bhowmik

Neural Computing and Applications, Springer

"Salient Features for Moving Object Detection in Adverse Weather Conditions during Night Time",

A. Singha, M.K. Bhowmik

IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), IEEE

DOI: 10.1109/TCSVT.2019.2926164, 2019.

[PDF]

"TU-VDN: Tripura University Video Dataset at Night Time in Degraded Atmospheric Outdoor Conditions for Moving Object Detection",

A. Singha, M.K. Bhowmik

IEEE International Conference on Image Processing (ICIP), IEEE

DOI: 10.1109/ICIP.2019.8804411, 2019.

[PDF]

Patents

"System and Method for Detecting Object in Adverse Atmosphere by Restoring Degraded Image in Deep Convolutional Layer",

A. Singha, Sourav Dey Roy, M.K. Bhowmik

New Indian Patent Application No: 202131002651

Published on: July 22, 2022

[PDF]

Images

TU-VDN Samples:

Sample frames of the created dataset at night time: (a), (b) a visual frame and the corresponding thermal frame, respectively, under low-light conditions; (c), (d) a visual frame and the corresponding thermal frame, respectively, under dust conditions; (e), (f) a visual frame and the corresponding thermal frame, respectively, under rain conditions; and (g), (h) a visual frame and the corresponding thermal frame, respectively, under fog conditions. To characterize the textures in night time visual and night time thermal images, we use entropy as a measure of image content; the higher entropy values of the night time thermal frames indicate images that carry more detail and are therefore of better quality.
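The entropy measure mentioned above can be illustrated with a minimal sketch. It assumes the standard Shannon entropy of the grey-level histogram; the page does not state which entropy variant was used, and the function name and the toy frames below are hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(frame):
    """Shannon entropy (bits per pixel) of a greyscale frame.

    `frame` is a list of rows of integer intensities. This is an assumed,
    textbook definition, not necessarily the one used for TU-VDN.
    """
    pixels = [p for row in frame for p in row]
    counts = Counter(pixels)
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# Hypothetical toy frames: a nearly black visual frame and a thermal frame
# whose intensities are spread over many grey levels.
dark_visual = [[0, 0, 0, 0], [0, 0, 0, 1]]
thermal = [[10, 80, 150, 220], [30, 100, 170, 240]]

print(shannon_entropy(dark_visual), shannon_entropy(thermal))
```

In this toy case the thermal frame scores higher because its intensities are spread over more grey levels, which is the sense in which entropy is used above as a proxy for image detail.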

TU-VDN Statistics:

The TU-VDN dataset provides a realistic, diverse set of outdoor night-vision videos that were captured via a thermal modality. The current dataset consists of 60 video sequences that were captured under various atmospheric conditions; the key challenges of the video clips are listed in Table II. Each video clip is 2 minutes in duration and was recorded with a FLIR camera that was rigidly mounted at a 90° alignment on a tripod stand, at distances of 200 m to 2 km from the objects. In contrast, for a motion background, the video is captured by mounting the camera on a moving vehicle (20 to 30 km/h) such that the objects, camera, and background are moving simultaneously.

Flat Cluttered Background:

Outline of the salient-feature-based methodology over a flat cluttered background. (a) Background flat region. Each neighbouring pixel similarity pattern (Bs) is computed using the centre pixel (marked as ‘x’); (b) Foreground object flat region. The foreground string (Fs) has 6/8 matches with the background similarity string (Bs), so the pixel could be incorrectly categorized as background; (c) Foreground object flat region. The ALWBP descriptor (As) is computed using a randomly selected background sample (marked as ‘√’) as the reference centre pixel. The foreground string (As) has 3/8 matches with the background string (Bs), so the pixel is correctly categorized as foreground.
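The idea in this caption can be sketched in a few lines. This is an illustration under stated assumptions, not the published ALWBP descriptor: the 3x3 block, the absolute-difference similarity test, the tolerance `tol`, and both function names are hypothetical, and the toy values do not reproduce the 6/8 and 3/8 counts of the figure.

```python
def similarity_string(block, reference, tol):
    """Boolean string over the 8 neighbours of a 3x3 block.

    A bit is True when the neighbour lies within `tol` of `reference`.
    The similarity test and `tol` are assumptions made for this sketch.
    """
    neighbours = [block[r][c] for r in range(3) for c in range(3)
                  if (r, c) != (1, 1)]
    return [abs(v - reference) <= tol for v in neighbours]


def matches(a, b):
    """Number of positions at which two Boolean strings agree."""
    return sum(x == y for x, y in zip(a, b))


tol = 10  # hypothetical tolerance in intensity units
bg_block = [[50, 52, 49], [51, 50, 48], [50, 53, 51]]         # flat background
fg_block = [[120, 118, 121], [119, 120, 122], [121, 120, 118]]  # flat foreground
bg_sample = 50  # stands in for a randomly selected background sample

b_s = similarity_string(bg_block, bg_block[1][1], tol)  # background string
f_s = similarity_string(fg_block, fg_block[1][1], tol)  # own centre as reference
a_s = similarity_string(fg_block, bg_sample, tol)       # background sample as reference

print(matches(b_s, f_s))  # high agreement: the flat foreground looks like background
print(matches(b_s, a_s))  # low agreement: the foreground is separated
```

Using the block's own centre as the reference makes every flat region look alike, whereas referencing a stored background sample exposes the intensity gap between foreground and background.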

Background Model via ALWBP (BM ALWBP):

The overall pipeline of the proposed background segmentation method combines the ALWBP feature descriptor with background model generation. The background model takes both spatial-level and pixel-level features as inputs, represented as ALWBP Boolean patterns and thermal intensities, respectively. Model generation consists of three sub-steps: the segmentation decision, the adaptation of parameters, and the updating of the background samples.

Temperature Polarity Changes:

Thermal intensity changes upon the first appearance of an object, shown in (a) a thermal frame and (b) the next frame, in which the object enters for the first time. Updating the background pixels in the background model is essential to account for background changes such as these thermal intensity changes, a waving water layer, and shaking trees.
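One common way to keep a sample-based background model current is a conservative random update, sketched below. This is an assumption borrowed from sample-based methods in general, not the update rule of the proposed model: the function name, the `1 / learning_rate` update probability, and the sample values are all hypothetical.

```python
import random


def update_samples(samples, value, is_background, learning_rate, rng):
    """Return a copy of `samples`, possibly with one entry replaced by `value`.

    Only pixels classified as background are absorbed, and only with
    probability 1 / learning_rate (an assumed role for a learning parameter).
    """
    updated = list(samples)
    if is_background and rng.random() < 1.0 / learning_rate:
        updated[rng.randrange(len(updated))] = value
    return updated


rng = random.Random(0)  # seeded only to make the sketch repeatable
intensity_samples = [50, 51, 49, 52]  # hypothetical stored background intensities

# A background pixel whose intensity shifted is absorbed into the model.
print(update_samples(intensity_samples, 90, True, 1, rng))
# A foreground pixel never alters the stored samples.
print(update_samples(intensity_samples, 90, False, 1, rng))
```

A smaller learning rate absorbs background changes faster, at the cost of also absorbing slow or stationary foreground objects sooner.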

Background Dynamics:

Outdoor scenes are affected by movement in the background, e.g., due to waves or swaying tree leaves. As the figure shows, higher background dynamics (dmin) require faster increments of the decision threshold (RT), whereas the threshold gradually decreases when the background dynamics are low.
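The feedback behaviour described here can be sketched as a simple per-pixel rule. The rule below is a hypothetical illustration, not the model's actual update equation: the constants `scale`, `gain`, `decay`, and `r_floor`, and the function name, are assumptions chosen only to show the qualitative behaviour.

```python
def adapt_threshold(r_t, d_min, scale=5.0, gain=0.5, decay=0.05, r_floor=10.0):
    """One adaptation step of a decision threshold (assumed rule).

    While the threshold is below a level set by the background dynamics
    `d_min`, it grows by an amount proportional to `d_min`, so more dynamic
    backgrounds raise it faster. Otherwise it decays gradually, never
    dropping below `r_floor`.
    """
    if r_t < scale * d_min:
        r_t += gain * d_min
    else:
        r_t -= decay * r_t
    return max(r_t, r_floor)


# Track the threshold over a few frames for a dynamic and a calm pixel.
dynamic, calm = 20.0, 20.0
for _ in range(5):
    dynamic = adapt_threshold(dynamic, d_min=10.0)  # e.g. swaying leaves
    calm = adapt_threshold(calm, d_min=1.0)         # nearly static background
print(dynamic, calm)
```

A higher threshold in dynamic regions makes the segmentation decision more tolerant of background motion, while the slow decay restores sensitivity once the background settles.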

Experimental Results:

Typical segmentation results for several key challenges under various atmospheric conditions in our newly created night time dataset. Row (1) shows input frames, row (2) shows the ground truth, row (3) shows the BMUALWBP results, row (4) shows the ViBe results, row (5) shows the SuBSENSE results, row (6) shows the LOBSTER results, row (7) shows the PAWCS results, row (8) shows the FST results, row (9) shows the PBAS results, row (10) shows the Multicue results, row (11) shows the ISBM results, row (12) shows the MTD results, row (13) shows the VuMeter results, row (14) shows the KDE results, row (15) shows the MoG_V2 results, row (16) shows the Eigenbackground results, and row (17) shows the Codebook results.

Slides

Poster Presentation at ICIP 2019 Conference

TCSVT Slides